| Time (UTC) | Mon. 12 | Tue. 13 | Wed. 14 | Thu. 15 | Fri. 16 |
|---|---|---|---|---|---|
| 8:00 – 9:30 | Lab PS-1 (slides, video) | Lecture 1 (video) | Lecture 5 (slides, video) | Lecture 8 (slides, video) | Lecture 12 (slides) |
| 10:00 – 11:30 | Lab PE-1 (slides, video) | Lecture 2 | Lecture 6 (slides, video) | Lecture 9 (slides, video) | Lecture 13 (video) |
| 12:00 – 13:30 | Lab PS-2 | Lab PS-3 (repeat) | Lab PE-2 | Overview Lecture A (slides, video) | Overview Lecture B (video) |
| 14:00 – 15:30 | Lab PS-2 (repeat) | Lecture 3 (slides, video) | Lab PE-2 (repeat) | Lecture 10 (slides, video) | Lab PE-3 |
| 16:00 – 17:30 | Lab PS-3 | Lecture 4 (slides, video) | Lecture 7 (slides, video) | Lecture 11 (slides, video) | Lab PE-3 |

Overview Lectures

B: Lessons From Researching, Developing, and Deploying Autonomous Mobile Robots

Manuela Veloso

Head of JPMorgan Chase AI Research, Professor at Carnegie Mellon University

Labs

To accommodate participants in different time zones:

- Lab material will be sent out in advance.

- Participants will work in groups over a longer time period.

- Asynchronous chat will be available (e.g., a School Slack channel) where participants and speakers can ask questions and discuss the material.

- The online session will provide additional Q&A and a walk-through of model solutions.

Lab 1: Plan Synthesis will be an introduction to automated planning and domain modelling in PDDL. It will be delivered by Michael Cashmore and Gerard Canal.

Lab 2: Plan Execution will be an introduction to ROS and plan-based robot control. It will be delivered using theconstructsim. The lab was developed and will be delivered by Oscar Lima, Gerard Canal, and Stefan Bezrucav.

Additional information on the labs can be found using the links at the top of this page.

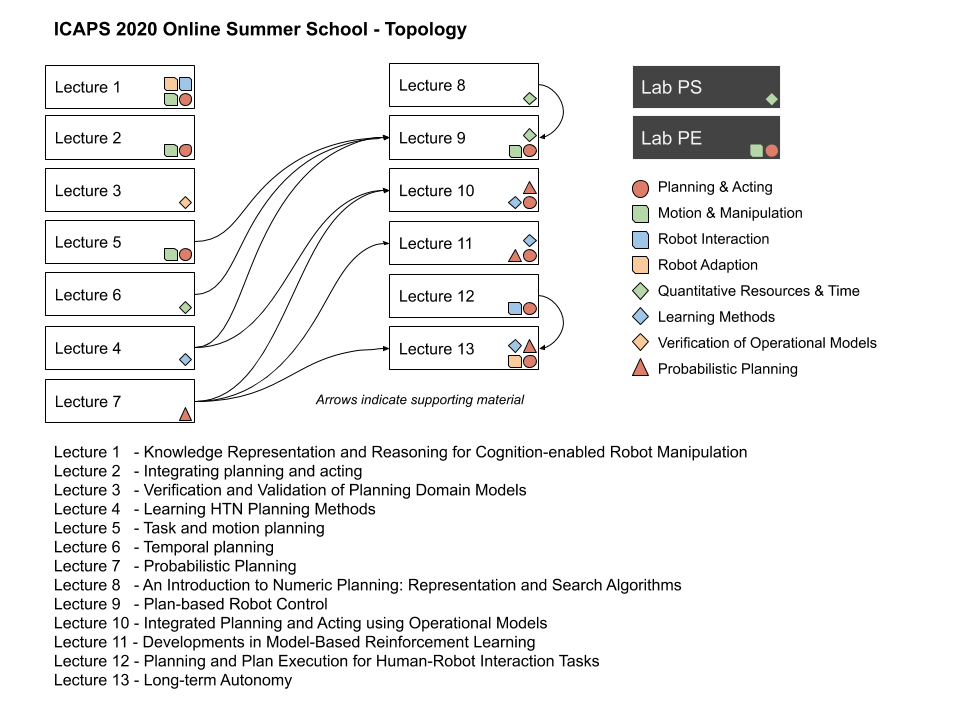

Lectures

To accommodate participants in different time zones, lectures will be recorded and made available online where possible.

Lecture 1. Knowledge Representation and Reasoning for Cognition-enabled Robot Manipulation

Michael Beetz

University of Bremen

Robotic agents that can accomplish manipulation tasks with the competence of humans have been the holy grail of AI and robotics research for more than 50 years. However, while the fields have made huge progress over the years, this ultimate goal is still out of reach. One reason is that the knowledge representation and reasoning methods proposed in AI so far are necessary but still too abstract.

In this tutorial I propose to endow robots with the capability to mentally “reason with their eyes and hands”, that is, to internally emulate and simulate their perception-action loops based on photo-realistic images and faithful physics simulations, which are made machine-understandable by casting them as virtual symbolic knowledge bases. These capabilities allow robots to generate huge collections of machine-understandable manipulation experiences, which they can then generalize into commonsense and intuitive physics knowledge applicable to open manipulation task domains. The combination of learning, representation, and reasoning will equip robots with an understanding of the relation between their motions and the physical effects they cause at an unprecedented level of realism, depth, and breadth, and enable them to master human-scale manipulation tasks. This breakthrough is achieved by combining simulation and visual rendering technologies with mechanisms to semantically interpret internal simulation data structures and processes.

Lecture 2. Integrating Time-Constrained Planning and Acting

Tim Niemueller

X, The Moonshot Factory

The objective of task planning is to generate plans that are executable and effect some desired change in the environment. In robotics, this means operating in a physical environment, simulated or real. While in planning research generating a feasible plan can be enough, in fields such as robotics the plan must also be executed efficiently and robustly to be of value. In this lecture, we will focus on existing execution systems and their integration with task planning, commonly encountered modeling gaps, and how planning can be used at execution time to deal with new information and failures, which makes systems more resilient but requires especially short planning times.
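To make the plan-execute-replan cycle concrete, here is a minimal sketch under our own assumptions: a toy road graph, a breadth-first `plan` function, and an `execute` loop (all names hypothetical, not taken from any system covered in the lecture). The executor monitors each action; when one fails, it records the new information and replans from the current state.

```python
from collections import deque

def plan(graph, start, goal, blocked):
    """Breadth-first search for a shortest sequence of moves, avoiding edges known to be blocked."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in visited and (path[-1], nxt) not in blocked:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None  # no plan exists under current knowledge

def execute(graph, start, goal, actually_blocked, max_replans=5):
    """Execute plans step by step; on an action failure, update knowledge and replan."""
    known_blocked = set()
    state, trace = start, [start]
    for _ in range(max_replans + 1):
        path = plan(graph, state, goal, known_blocked)
        if path is None:
            return None, trace
        for nxt in path[1:]:
            if (state, nxt) in actually_blocked:   # monitoring detects the failure
                known_blocked.add((state, nxt))    # new information for the next plan
                break
            state = nxt
            trace.append(state)
        else:
            return state, trace                    # plan finished without failures
    return None, trace

graph = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"], "D": []}
final, trace = execute(graph, "A", "D", actually_blocked={("B", "D")})
print(final, trace)  # D ['A', 'B', 'A', 'C', 'D']
```

Replanning from the current state, rather than from the initial one, preserves the progress already made; in a time-constrained setting, the `plan` call inside the loop is exactly where short planning times matter.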

Lecture 3. Verification and Validation of Planning Domain Models

Jeremy Frank

Intelligent Systems Division – NASA

Automated planning is central to space mission operations, autonomous systems, and many other applications. Model-based planning software is well known to this audience; a model is a description of the objects, actions, constraints, and preferences that the planner reasons over to generate plans. Developing, verifying, and validating a planning model is, however, a difficult task. Planning constraints and preferences arise from many sources, including simulators and engineering specification documents. As constraints evolve, planning domain modelers must add and update model constraints efficiently using the available source data, catch errors quickly, and correct the model. The consequences of erroneous models are very high, especially in the space operations environment. For model-based planning of a cyber-physical system, we can take advantage of existing simulators as ground truth to assist in verifying planning models. We use a spacecraft as a motivating example, describing the lower-level command and data interfaces that such spacecraft often have. We next review the model-based planning representation of this spacecraft, and then describe how to use abstractions and refinements to verify the planning model relative to the simulation. This approach integrates modeling and simulation environments to reduce model editing time, generates simulations automatically to evaluate plans, and identifies modeling errors automatically by evaluating simulation output. While detecting errors is possible, fixing them remains an open challenge. We conclude with some remarks about scalability, drawn from a lunar science spacecraft (LADEE), which motivated early work in this area.
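A much-simplified sketch of using a simulator as ground truth: step a plan through the planning model and through a simulator from the same state, and report the first action whose predicted effect disagrees with the simulated one. The functions, the battery example, and the exact-equality comparison are hypothetical assumptions; the lecture's approach relates model and simulation states through abstractions and refinements, which this sketch reduces to an identity mapping.

```python
def first_model_error(plan, init, model_step, sim_step):
    """Step a plan through the planning model and a simulator from the same state;
    return (step, action, predicted, observed) at the first disagreement, or None."""
    state = dict(init)
    for i, action in enumerate(plan):
        predicted = model_step(state, action)
        observed = sim_step(state, action)
        if predicted != observed:
            return i, action, predicted, observed
        state = observed  # continue from the simulator's (ground-truth) state
    return None

# Hypothetical energy costs: the planning model underestimates "downlink".
MODEL_COST = {"slew": 5, "downlink": 10}
SIM_COST = {"slew": 5, "downlink": 15}

def model_step(state, action):
    return {**state, "battery": state["battery"] - MODEL_COST[action]}

def sim_step(state, action):
    return {**state, "battery": state["battery"] - SIM_COST[action]}

print(first_model_error(["slew", "downlink"], {"battery": 100}, model_step, sim_step))
# (1, 'downlink', {'battery': 85}, {'battery': 80})
```

Continuing each step from the simulator's state, rather than from the model's own prediction, keeps one wrong action model from making every later comparison fail, so the report points at the faulty action.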

Lecture 4. Learning HTN Planning Methods

Hector Munoz-Avila

Lehigh University

Humans learn complex skills by first acquiring simple skills, which are then combined to learn more complex ones. As a result, many cognitive architectures use hierarchical models representing relations between skills of different complexity. One effective way to model hierarchical planning knowledge is with Hierarchical Task Networks (HTNs). HTNs have been shown to be capable of representing problem-solving knowledge in a variety of tasks such as web service composition, strategic military planning, and narrative generation. These domains share an underlying hierarchical structure that allows the problem to be partitioned into smaller, more manageable subproblems, each of which can be solved and combined into a solution for the larger problem. HTN planning’s performance hinges on a robust domain description, which consists of the action model and the task model. The action model encodes knowledge about valid actions, or primitive tasks, that change the world state. The task model encodes knowledge about how to decompose tasks, and is the part of the domain description that has been argued to be difficult to obtain. In this talk I present an overview of research on automated learning of HTN domain descriptions in a variety of settings, including fully observable, partially observable, deterministic, and nondeterministic domains, using a variety of techniques including goal regression, inductive learning, constraint satisfaction and, more recently, word embeddings. I will also present recent work on learning hierarchical models using deep learning techniques.
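To illustrate the two parts of an HTN domain description, the sketch below hand-writes a tiny action model (operators that transform a state) and task model (methods that decompose compound tasks), then decomposes a task with a simple total-order procedure loosely in the style of SHOP-like planners. The delivery domain and all names are hypothetical; the hand-written methods are the kind of knowledge the learning techniques in this lecture aim to acquire automatically.

```python
def seek_plan(state, tasks, operators, methods):
    """Total-order HTN decomposition: returns a list of primitive tasks or None."""
    if not tasks:
        return []
    task, rest = tasks[0], tasks[1:]
    name, args = task[0], task[1:]
    if name in operators:                        # primitive task: apply the action model
        new_state = operators[name](state, *args)
        if new_state is None:
            return None
        tail = seek_plan(new_state, rest, operators, methods)
        return None if tail is None else [task] + tail
    for method in methods.get(name, []):         # compound task: try each method in turn
        subtasks = method(state, *args)
        if subtasks is not None:
            result = seek_plan(state, subtasks + rest, operators, methods)
            if result is not None:
                return result
    return None

# Action model (hypothetical toy domain): each operator returns a new state or None.
def move(s, dest):
    return None if s["robot"] == dest else {**s, "robot": dest}

def pick(s, obj):
    return {**s, obj: "held"} if s[obj] == s["robot"] else None

def drop(s, obj):
    return {**s, obj: s["robot"]} if s[obj] == "held" else None

# Task model: methods map a compound task to a list of subtasks (or None if not applicable).
methods = {
    "goto": [lambda s, dest: [] if s["robot"] == dest else None,
             lambda s, dest: [("move", dest)]],
    "deliver": [lambda s, obj, dest: [("goto", s[obj]), ("pick", obj), ("goto", dest), ("drop", obj)]
                if s[obj] != "held" else None],
}
operators = {"move": move, "pick": pick, "drop": drop}

state = {"robot": "dock", "box": "shelf"}
print(seek_plan(state, [("deliver", "box", "kitchen")], operators, methods))
# [('move', 'shelf'), ('pick', 'box'), ('move', 'kitchen'), ('drop', 'box')]
```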

Lecture 5. Task and Motion Planning

Fabien Lagriffoul

Örebro University

Task and Motion Planning (TAMP) addresses the problem of computing, given a symbolic goal description, a sequence of symbolic actions AND motion paths for one or several robots to achieve that goal. Although efficient algorithms have been developed for Task Planning and for Motion Planning individually, combining both types of planning results in a different planning problem, which cannot be solved efficiently by naively combining the two techniques. In this lecture, we will first define the TAMP problem(s), examine the difficulties that arise when combining symbolic and geometric search spaces, review some approaches to tackling this problem, and give a brief overview of future directions in this field.

Lecture 6. Temporal Planning

Andrea Micheli

Fondazione Bruno Kessler

Arthur Bit-Monnot

INSA Toulouse & LAAS-CNRS

Temporal planning is the problem of synthesizing a course of actions to reach a desired goal, given a formal description of a system detailing its possible evolutions in time. Temporal planning requires solving the planning problem of deciding which activities to perform in order to reach a goal, together with the scheduling problem of deciding when to execute them in order to fulfill temporal constraints such as deadlines, durations, and synchronizations. In this lecture, we will start by introducing the temporal planning problem, its main applications, and its theoretical complexity. We will discuss temporal reasoning formalisms to represent and query temporal knowledge. We will then survey both state-oriented and time-oriented approaches, highlighting the major available techniques and tools as well as the principal research results.
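One classic temporal reasoning formalism is the Simple Temporal Network (STN), whose constraints have the form `lo <= t_j - t_i <= hi` over time points. An STN is consistent exactly when its distance graph has no negative cycle, which the Floyd-Warshall algorithm detects; the resulting shortest-path matrix also gives the tightest bounds on each time point. This is a sketch under our own assumptions (encoding, names, and example are ours), not necessarily the form in which the lecture presents temporal reasoning.

```python
import math

def stn_solve(n, constraints):
    """Simple Temporal Network: constraints are (i, j, lo, hi) meaning lo <= t_j - t_i <= hi.
    Returns the shortest-path distance matrix, or None if the constraints are inconsistent."""
    d = [[0 if i == j else math.inf for j in range(n)] for i in range(n)]
    for i, j, lo, hi in constraints:
        d[i][j] = min(d[i][j], hi)     # t_j - t_i <= hi
        d[j][i] = min(d[j][i], -lo)    # t_i - t_j <= -lo
    for k in range(n):                 # Floyd-Warshall all-pairs shortest paths
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
    if any(d[i][i] < 0 for i in range(n)):  # negative cycle => inconsistent
        return None
    return d

# Time points: 0 = plan start, 1 = start of action a, 2 = end of action a (hypothetical).
base = [(0, 1, 0, math.inf), (1, 2, 2, 3)]     # a starts after time 0 and lasts 2..3
d = stn_solve(3, base + [(0, 2, 0, 4)])        # deadline: a ends by time 4
print(-d[2][0], d[0][2])                       # earliest and latest end of a: 2 4
print(stn_solve(3, base + [(0, 2, 0, 1)]))     # deadline 1 cannot be met: None
```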

Lecture 7. Probabilistic Planning

Scott Sanner

University of Toronto

This tutorial provides an introduction to planning under uncertainty based on the framework of Markov Decision Processes (MDPs). After motivating the basic MDP definition, the tutorial will cover a variety of fundamental solution methods for probabilistic planning in MDPs.
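As a minimal illustration of one fundamental MDP solution method, value iteration repeatedly applies the Bellman optimality backup `V(s) = max_a [R(s,a) + gamma * sum_s' P(s'|s,a) V(s')]` until the values stop changing, then reads off a greedy policy. The two-state MDP, its reward numbers, and the dictionary encoding are hypothetical choices for this sketch.

```python
def value_iteration(P, R, gamma=0.9, tol=1e-10):
    """P[s][a] = list of (next_state, probability); R[s][a] = expected immediate reward.
    States with no actions are treated as terminal (value 0)."""
    def q(s, a, V):
        return R[s][a] + gamma * sum(p * V[s2] for s2, p in P[s][a])

    V = {s: 0.0 for s in P}
    while True:
        delta = 0.0
        for s in P:
            if P[s]:
                new_v = max(q(s, a, V) for a in P[s])   # Bellman optimality backup
                delta = max(delta, abs(new_v - V[s]))
                V[s] = new_v                            # in-place (Gauss-Seidel) update
        if delta < tol:
            break
    policy = {s: max(P[s], key=lambda a: q(s, a, V)) for s in P if P[s]}
    return V, policy

# Hypothetical two-state MDP: a cheap but unreliable action vs. an expensive reliable one.
P = {"start": {"safe": [("goal", 1.0)], "risky": [("goal", 0.5), ("start", 0.5)]}, "goal": {}}
R = {"start": {"safe": -1.5, "risky": -1.0}, "goal": {}}
V, policy = value_iteration(P, R, gamma=0.5)
print(round(V["start"], 4), policy)  # -1.3333 {'start': 'risky'}
```

With `gamma = 0.5`, the risky action's value solves `V = -1 + 0.25 V`, giving `-4/3`, which beats the safe action's `-1.5`.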

Lecture 8. An Introduction to Numeric Planning: Representation and Search Algorithms

Alfonso Gerevini

University of Brescia

Enrico Scala

University of Brescia

This lecture will provide an introduction to a class of (model-based) numeric planning problems that use both propositional and numeric state variables to specify the problem's actions, states, and goals. The lecture will present PDDL2.1, the standard language that planning researchers and practitioners use to represent such problems, and the fundamental algorithms used in state-of-the-art planners supporting model-based numeric planning. The presentation will focus on techniques based on explicit exploration of the state space, and on heuristic functions aimed at efficiently and accurately approximating the structure of the problem to guide the search for both optimal and satisficing plans.
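A minimal sketch of the ingredients named above: states that combine propositional facts with numeric variables, actions with numeric preconditions and increase/decrease effects (in PDDL2.1, e.g. `(>= (fuel) 1)` and `(decrease (fuel) 1)`), and explicit exploration of the state space. The Python encoding and the fuel domain are our own assumptions, and plain breadth-first search stands in for the heuristic search algorithms the lecture covers.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet

@dataclass
class Action:
    name: str
    pre: FrozenSet[str]                         # propositional preconditions
    num_pre: Callable[[Dict[str, int]], bool]   # numeric precondition over state variables
    add: FrozenSet[str] = frozenset()
    delete: FrozenSet[str] = frozenset()
    num_eff: Dict[str, int] = field(default_factory=dict)  # increase (+) / decrease (-)

def successors(state, actions):
    props, nums = state
    values = dict(nums)
    for a in actions:
        if a.pre <= props and a.num_pre(values):
            new_vals = dict(values)
            for var, delta in a.num_eff.items():
                new_vals[var] += delta
            yield a.name, ((props - a.delete) | a.add, tuple(sorted(new_vals.items())))

def bfs(init, is_goal, actions):
    """Explicit breadth-first exploration of the (propositional + numeric) state space."""
    frontier, seen = deque([(init, [])]), {init}
    while frontier:
        state, plan = frontier.popleft()
        if is_goal(state):
            return plan
        for name, nxt in successors(state, actions):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, plan + [name]))
    return None

def move(src, dst):  # driving one road segment consumes one unit of fuel
    return Action(f"move-{src}-{dst}", frozenset({f"at-{src}", f"road-{src}-{dst}"}),
                  lambda v: v["fuel"] >= 1, frozenset({f"at-{dst}"}), frozenset({f"at-{src}"}),
                  {"fuel": -1})

actions = [move("a", "b"), move("b", "a"), move("b", "c"), move("c", "b"),
           Action("refuel", frozenset({"at-a", "depot-a"}), lambda v: v["fuel"] + 1 <= 3,
                  num_eff={"fuel": 1})]
roads = {"road-a-b", "road-b-a", "road-b-c", "road-c-b"}
init = (frozenset({"at-a", "depot-a"} | roads), (("fuel", 1),))
print(bfs(init, lambda s: "at-c" in s[0], actions))  # ['refuel', 'move-a-b', 'move-b-c']
```

The refuel action's numeric precondition `fuel + 1 <= 3` keeps the reachable state space finite; without such a bound, numeric planning problems can have infinitely many states, which is one reason informed search matters.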

Lecture 9. Plan-Based Robot Control

Joachim Hertzberg

Oscar Lima Carrion


Sebastian Stock

DFKI, Osnabrück, Germany

Plan-based robot control (PBRC) comprises not only action plan generation but also a long list of additional planning topics: temporal, spatial, trajectory, and hierarchical planning, plus execution monitoring and control with its many aspects. After a short recap of PBRC, the lecture will zoom in on approaches to hierarchical planning and their benefits for PBRC, and on ROSPlan and the status of our integration of hierarchical planners into it. The lecture will also include simulated demos of hierarchical robot plan generation and execution.

Lecture 10. Integrated Planning and Acting using Operational Models

Dana Nau

University of Maryland

Sunandita Patra

University of Maryland

AI planning research has traditionally conceived of planning in environments where each action is a simple atomic event represented by a “descriptive model” (e.g., a PDDL model) specifying what the action’s outcome will be. In contrast, for an actor that executes the plan, each of the planner’s atomic actions is likely to be a complex activity whose details are described by an “operational model” (e.g., a computer program) telling how the action should be performed. Execution of these operational models may occur in an open and dynamically changing world in which unforeseen events require quick replanning. Thus it may be essential for the planner to run online, in tight integration with the actor’s reasoning processes.

We will present such an actor and a planner, and show how to use them. The actor, RAE, uses a set of hierarchically organized operational models called refinement methods. The planner, UPOM, runs online as a subroutine of RAE. Rather than using a separate set of descriptive models, UPOM plans by performing RAE’s refinement methods in a simulated environment, using a Monte Carlo Tree Search (MCTS) approach. Further information about RAE and UPOM is available in [1].
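UPOM's details are beyond this page, but the selection rule at the heart of UCT-style MCTS can be sketched on its own: choose among candidate refinement methods by simulating them repeatedly, balancing exploitation and exploration with UCB1. This is a flat, one-level simplification under our own assumptions (hypothetical method names, success probabilities, and a 0/1 simulated outcome); it is not UPOM itself, which applies MCTS to RAE's hierarchical refinement methods.

```python
import math
import random

def ucb1_pick(counts, totals, n, c=math.sqrt(2)):
    """UCB1: try every option once, then maximize mean reward plus an exploration bonus."""
    for m, k in counts.items():
        if k == 0:
            return m
    return max(counts, key=lambda m: totals[m] / counts[m] + c * math.sqrt(math.log(n) / counts[m]))

def choose_method(methods, simulate, rollouts=1000, seed=0):
    """Rank candidate refinement methods by repeatedly simulating them, UCB1-style."""
    rng = random.Random(seed)
    counts = {m: 0 for m in methods}
    totals = {m: 0.0 for m in methods}
    for n in range(1, rollouts + 1):
        m = ucb1_pick(counts, totals, n)
        totals[m] += simulate(m, rng)   # 1.0 for a simulated success, 0.0 otherwise
        counts[m] += 1
    return max(counts, key=counts.get)  # the most-simulated method is the recommendation

# Hypothetical success probabilities for two ways of refining an "open door" task.
success = {"push_door": 0.3, "turn_handle": 0.9}
print(choose_method(list(success), lambda m, rng: float(rng.random() < success[m])))
```

Recommending the most-simulated method, rather than the one with the highest empirical mean, is a common robust choice because UCB1 concentrates its simulations on the option it believes best.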

Tutorial attendees will be given copies of RAE and UPOM, instructions on how to use them, and operational models and test data for several different scenarios.

[1]

Lecture 11. Developments in Model-Based Reinforcement Learning

Alan Fern

Oregon State University

Model-Based Reinforcement Learning (MBRL) is a research topic that connects the fields of automated planning and reinforcement learning. MBRL agents are characterized by an explicit attempt to learn world models from either online or offline data and to use those models to improve the reinforcement-learning process. This lecture will give a brief historical review of MBRL and then review some of the recent trends in both online and offline MBRL. The lecture will also offer perspectives on fruitful research directions for more powerfully marrying MBRL with work from the automated planning community.
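As a minimal example of the basic MBRL recipe described above (learn a world model from data, then plan with it), the sketch below estimates a tabular transition and reward model by counting observed transitions, then plans on it with finite-horizon dynamic programming. This certainty-equivalence approach, the toy dataset, and all names are our own assumptions, not methods from the lecture.

```python
from collections import defaultdict

def learn_model(transitions):
    """Maximum-likelihood tabular model from (state, action, reward, next_state) samples."""
    counts = defaultdict(lambda: defaultdict(int))
    reward_sum = defaultdict(float)
    for s, a, r, s2 in transitions:
        counts[(s, a)][s2] += 1
        reward_sum[(s, a)] += r
    P, R = {}, {}
    for sa, nexts in counts.items():
        n = sum(nexts.values())
        P[sa] = {s2: c / n for s2, c in nexts.items()}
        R[sa] = reward_sum[sa] / n
    return P, R

def plan_with_model(P, R, state, horizon):
    """Undiscounted finite-horizon dynamic programming on the learned model;
    returns the greedy first action for `state`."""
    states = {s for s, _ in P} | {s2 for nexts in P.values() for s2 in nexts}

    def q(s, a, V):
        return R[(s, a)] + sum(p * V[s2] for s2, p in P[(s, a)].items())

    V = {s: 0.0 for s in states}                  # value with 0 steps to go
    for _ in range(horizon - 1):
        V = {s: max((q(s, a, V) for (s_, a) in P if s_ == s), default=0.0) for s in states}
    return max((a for (s_, a) in P if s_ == state), key=lambda a: q(state, a, V))

data = ([("A", "go", 0, "B")] * 3 + [("A", "go", 0, "A")] + [("A", "stay", 0, "A")] * 2
        + [("B", "stay", 1, "B")] * 2 + [("B", "go", 0, "A")] * 2)
P, R = learn_model(data)
print(P[("A", "go")], plan_with_model(P, R, "A", horizon=3))  # {'B': 0.75, 'A': 0.25} go
```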

Lecture 12. Planning and Plan Execution for Human-Robot Interaction Tasks

Ron Petrick

Heriot-Watt University

Luca Iocchi

Sapienza University of Rome

In this lecture, we will present an overview of problems and solutions that arise when automated planning techniques are used to model scenarios in which humans and artificial agents work together to achieve a common goal. In particular, we will describe the use of epistemic planning to explicitly model an agent’s knowledge about humans, and issues related to the execution of plans that mix agent and human actions.

We will give examples from Human-Robot Interaction domains and provide pointers to frameworks and tools that can be used to develop practical solutions in these domains.

Lecture 13. Long-Term Autonomy

Nick Hawes

Oxford Robotics Institute

Long-term goal-driven robotic autonomy requires planning that enables an autonomous robot to adapt its behaviour to the variations that occur in its tasks and environment. In this talk I will present our approach to planning for long-term autonomy, which combines Markov decision processes, probabilistic verification, and machine learning. This approach has been used to guide years of robot behaviour in real-world deployments. I will also present a number of recent developments that extend our basic approach to address multiple robots, multiple objectives, partially known environments, and planning for human interventions.